Abstract

This talk is Part 2 of my September Lisp NYC presentation on Reinforcement Learning and Artificial Neural Nets. We will continue where we left off by covering Convolutional Neural Nets (CNNs) and Recurrent Neural Nets (RNNs) in depth.

Time permitting, I also plan to include a few slides on each of the following topics:

1. Generative Adversarial Networks (GANs)

2. Differentiable Neural Computers (DNCs)

3. Deep Reinforcement Learning (DRL)

Some code examples will be provided in Clojure.

After a very brief recap of Part 1 (ANN & RL), we will jump right into CNNs and why they are well suited to image recognition. We will start with the convolution operator, then explain feature maps and pooling operations, and finally walk through the LeNet-5 architecture. The MNIST dataset will be used to illustrate a fully functioning CNN.
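As a rough preview of the convolution and pooling steps mentioned above, here is a minimal sketch in plain Python. It is not taken from the talk's slides or its Clojure examples. The function names `conv2d_valid` and `max_pool2d`, the toy 6x6 image, and the edge-detecting kernel are all assumptions made for illustration. Like most CNN libraries, it computes a cross-correlation (the kernel is not flipped).

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with no padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size tile."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# Hypothetical 6x6 image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hypothetical 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = conv2d_valid(image, kernel)  # 4x4 map, strongest where the edge sits
pooled = max_pool2d(feature_map, size=2)   # 2x2 summary of the feature map
```

The feature map is large only where the kernel overlaps the edge, and pooling shrinks it while keeping the strongest responses. A CNN learns its kernel values during training rather than having them fixed by hand as they are here.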

Next, we cover Recurrent Neural Nets in depth and describe how they have been used in Natural Language Processing. We will explain why gated networks and LSTMs are used in practice.
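One commonly cited motivation for gated architectures is the vanishing gradient problem in plain recurrent nets. The toy sketch below is an assumption-laden illustration, not material from the talk. It uses a scalar RNN `h_t = tanh(w * h_{t-1})` with a hypothetical weight `w = 0.5` and a hypothetical helper `gradient_through_time`, and tracks how the gradient of the final state with respect to the initial state shrinks as the number of time steps grows.

```python
import math


def gradient_through_time(w, h0, steps):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


# Hypothetical settings chosen only to make the effect visible.
short_range = gradient_through_time(w=0.5, h0=0.5, steps=5)
long_range = gradient_through_time(w=0.5, h0=0.5, steps=50)

print("gradient across 5 steps: ", short_range)
print("gradient across 50 steps:", long_range)
```

Because every factor in the product is smaller than 0.5 in magnitude here, the gradient across 50 steps is many orders of magnitude smaller than across 5, so early inputs barely influence learning. Gated units such as LSTMs add a path along which this signal can be preserved.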

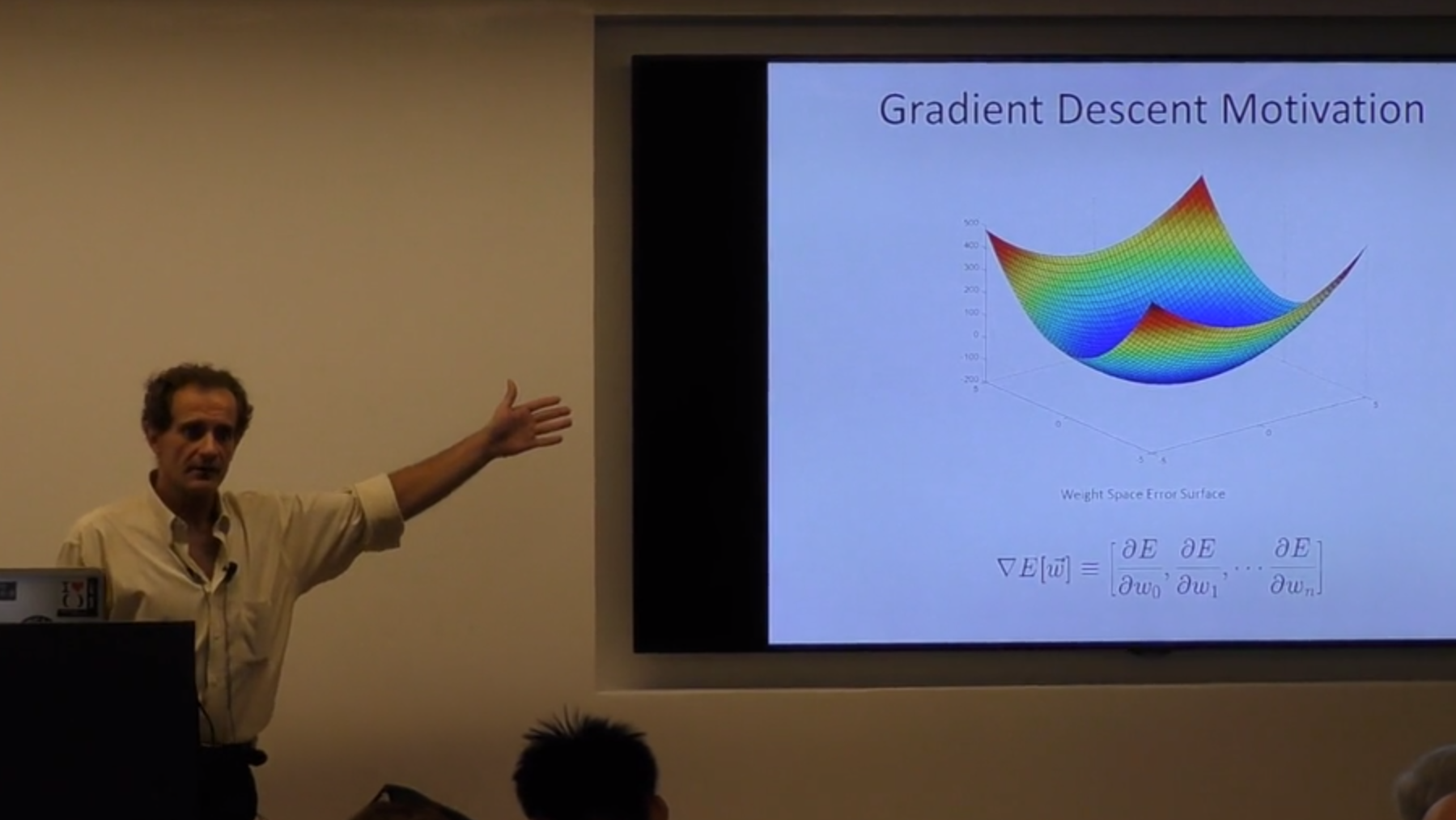

Please note that some familiarity with Gradient Descent and Backpropagation will be assumed. These are covered in the first part of the talk, for which both video and slides are available online.

Much of the material will be drawn from the new Deep Learning book by Goodfellow, Bengio & Courville, from Michael Nielsen's online book Neural Networks and Deep Learning, and from several other online resources.

Bio

Pierre de Lacaze has over 20 years of industry experience with AI and Lisp-based technologies. He holds a Bachelor of Science in Applied Mathematics and a Master’s Degree in Computer Science.
